In this article I introduce the concept of the Microprocess Architecture, which addresses some important limitations of traditional monolithic Case Management applications.

This article has been updated on 2018-05-21 to correct a link to a previous blog article, and on 2018-09-15 to include a link to the Process Group Instance Pattern.

You might also be interested in the following supporting pattern: the Process Group Instance Pattern.

In the Oracle Integration Cloud a Case Management application consists of at least one Dynamic Process, which in turn consists of Case Activities. A Case Activity is implemented by a (structured) Process or a Human Task. The unit of deployment is an Application, which consists of one or more Dynamic Processes plus the implementations of their activities (Processes, Human Tasks), and may also include a number of Forms, among other things.

The same application can have multiple revisions (versions) deployed at the same time, each with its own Revision Id. Only one of them can be the default revision. It is important to realize that once an instance of a case is started, it keeps running in the same revision. In contrast to the (on-premises) Oracle BPM Suite, there is (currently) no way to move, or migrate as it is called, an instance from one revision to another. That holds for the Dynamic Process as well as for the implementations of its activities.
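To make the effect of revision pinning concrete, here is a minimal sketch in Python. It is not Oracle Integration Cloud code: the classes `Application` and `CaseInstance` and their methods are hypothetical names I made up for illustration. I also assume that a new instance starts in the default revision and that a newly deployed revision becomes the default.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Application:
    """Hypothetical stand-in for a deployed application with several revisions."""
    name: str
    revisions: Dict[str, str] = field(default_factory=dict)  # revision id -> behaviour
    default_revision: Optional[str] = None

    def deploy(self, revision_id: str, behaviour: str) -> None:
        # Assumption: the most recently deployed revision becomes the default.
        self.revisions[revision_id] = behaviour
        self.default_revision = revision_id

    def start_instance(self) -> "CaseInstance":
        # Assumption: a new instance is bound to the current default revision.
        return CaseInstance(self, self.default_revision)


@dataclass
class CaseInstance:
    application: Application
    revision_id: str  # fixed at start; the model offers no migrate operation

    def behaviour(self) -> str:
        # The instance always runs the code of the revision it was started in.
        return self.application.revisions[self.revision_id]


app = Application("LegalCase")
app.deploy("1.0", "activity with bug")
old_case = app.start_instance()

app.deploy("1.1", "activity with fix")
new_case = app.start_instance()

print(old_case.revision_id, old_case.behaviour())
print(new_case.revision_id, new_case.behaviour())
```

In this model the case that was started before revision 1.1 was deployed keeps running the buggy activity, while only newly started cases get the fix.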

The consequence is that new features and bug fixes cannot be applied to running instances. On top of that, for some types of Case Management applications, instances can run for a very long time. Think of legal cases, for example. This can quickly turn release management of Case Management applications into a difficult job, if not a nightmare, especially when new versions need to be deployed regularly.

An effective way to address this is to apply a Microprocess Architecture. It is no coincidence that the name sounds similar to Microservice Architecture: it addresses some of the same challenges and shares some of its characteristics. As I argued some time ago in my blog article "Are MicroServices the Death of BPM and Case Management?", it is not the same, though.

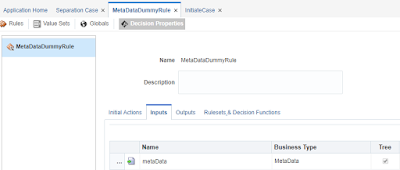

With a Microprocess Architecture you implement a Case Activity as a Delegate, which is just a "hook" that calls another Process in a different application, to which the actual implementation has been delegated. The Case Management application and each of the implementing Process applications have their own life cycle.
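The Delegate idea can be sketched in a few lines of Python. Again, this is only an illustration and not Oracle Integration Cloud code: `ProcessApplication`, `Delegate` and `CaseInstance` are hypothetical names, and I assume that a call to the implementing application is served by whatever its default revision is at the moment of the call.

```python
from typing import Callable, Dict, List, Optional


class ProcessApplication:
    """Hypothetical implementing application with its own life cycle."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._revisions: Dict[str, Callable[[str], str]] = {}
        self._default: Optional[str] = None

    def deploy(self, revision_id: str, implementation: Callable[[str], str]) -> None:
        # Assumption: the most recently deployed revision becomes the default.
        self._revisions[revision_id] = implementation
        self._default = revision_id

    def call(self, payload: str) -> str:
        # Assumption: calls are served by the default revision at call time.
        return self._revisions[self._default](payload)


class Delegate:
    """Case Activity that is only a hook to the implementing Process."""

    def __init__(self, target: ProcessApplication) -> None:
        self.target = target

    def execute(self, payload: str) -> str:
        return self.target.call(payload)


class CaseInstance:
    """Running case; its list of activities is fixed when the case starts."""

    def __init__(self, activities: List[Delegate]) -> None:
        self._pending = list(activities)

    def run_next(self, payload: str) -> str:
        # Run the next activity that has not been reached yet.
        return self._pending.pop(0).execute(payload)


review = ProcessApplication("ReviewDocument")
review.deploy("1.0", lambda doc: f"reviewed {doc} (v1.0)")

case = CaseInstance([Delegate(review), Delegate(review)])
first = case.run_next("contract")

# A fix is deployed to the implementing application while the case is running.
review.deploy("1.1", lambda doc: f"reviewed {doc} (v1.1)")
second = case.run_next("contract")

print(first)
print(second)
```

The case itself is never redeployed here. Because the Delegate only resolves the implementation when the activity is reached, the second activity of the already running case is served by revision 1.1.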

The advantages are:

- Support for parallel development of the case (with the Oracle Integration Cloud only one person can work on an application at the same time).

- Reduce impact of implementing new features and bug fixes, as these may be kept local to an application.

- Allow running instances to benefit from new features and bug fixes in the implementation of a Case Activity (as long as the instance has not yet reached that activity).

- Reduce the number of revisions required for a Case Management application.

- Support reuse of the implementing Processes (which in theory also is possible with implementing Processes that reside in the Case Management application, but that's just theory 😉).

- Allow an implementing application to be deployed to a different container.

- Allow an implementing application to be (re)implemented using a different technology.

The consequences of a Microprocess Architecture are:

- Every Case Activity implementation requires an extra component to be realized (a Process Delegate plus the implementing Process).

- It increases the number of applications to be deployed, which to some extent is mitigated by the fourth advantage above (fewer revisions of the Case Management application).

Because of these two consequences (which also apply to a Microservices Architecture), one should make a conscious decision before applying a Microprocess Architecture to a Case Management application. For example, it might be less suitable for an application with short-running instances, or when there is no requirement for new features and bug fixes to apply to running instances.

There are a few prerequisites to a Microprocess Architecture, which I will discuss in one or more upcoming blog articles.

Further reading: